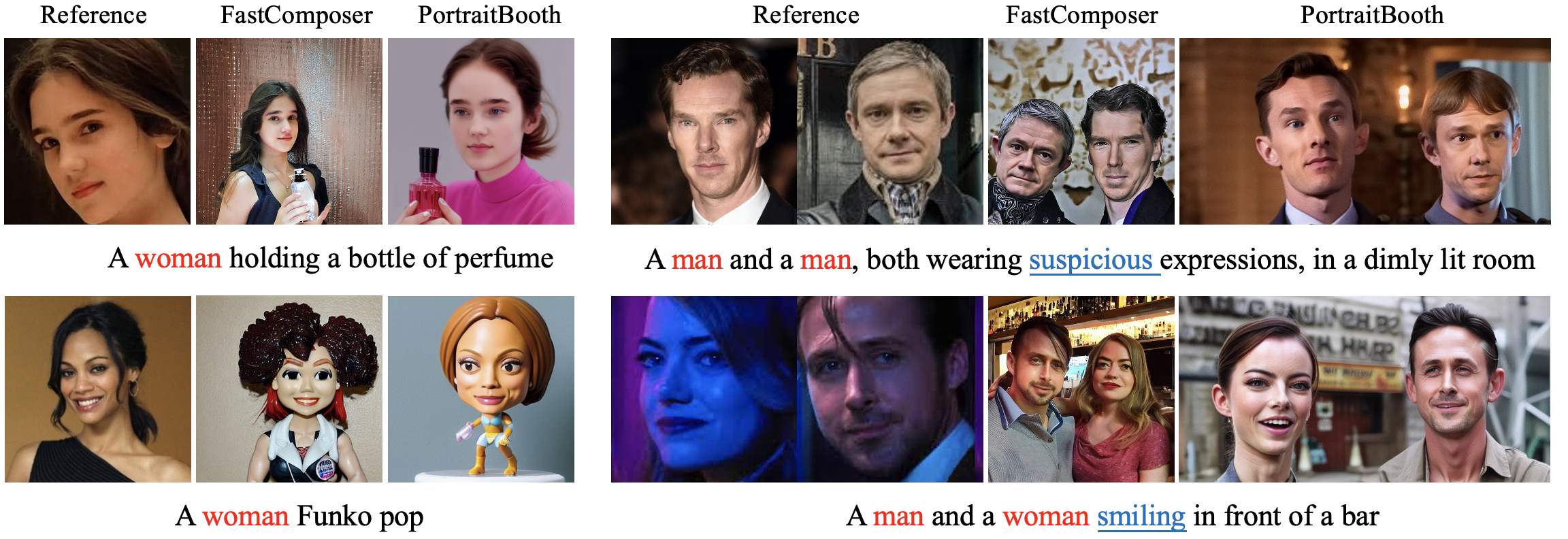

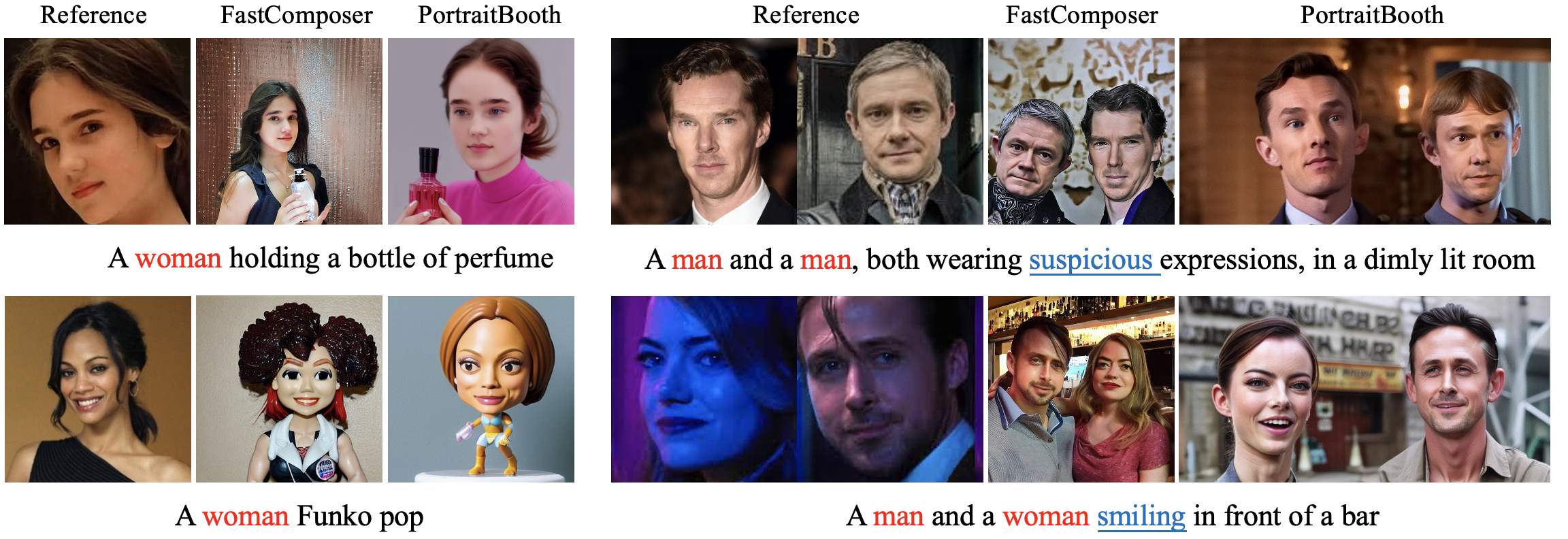

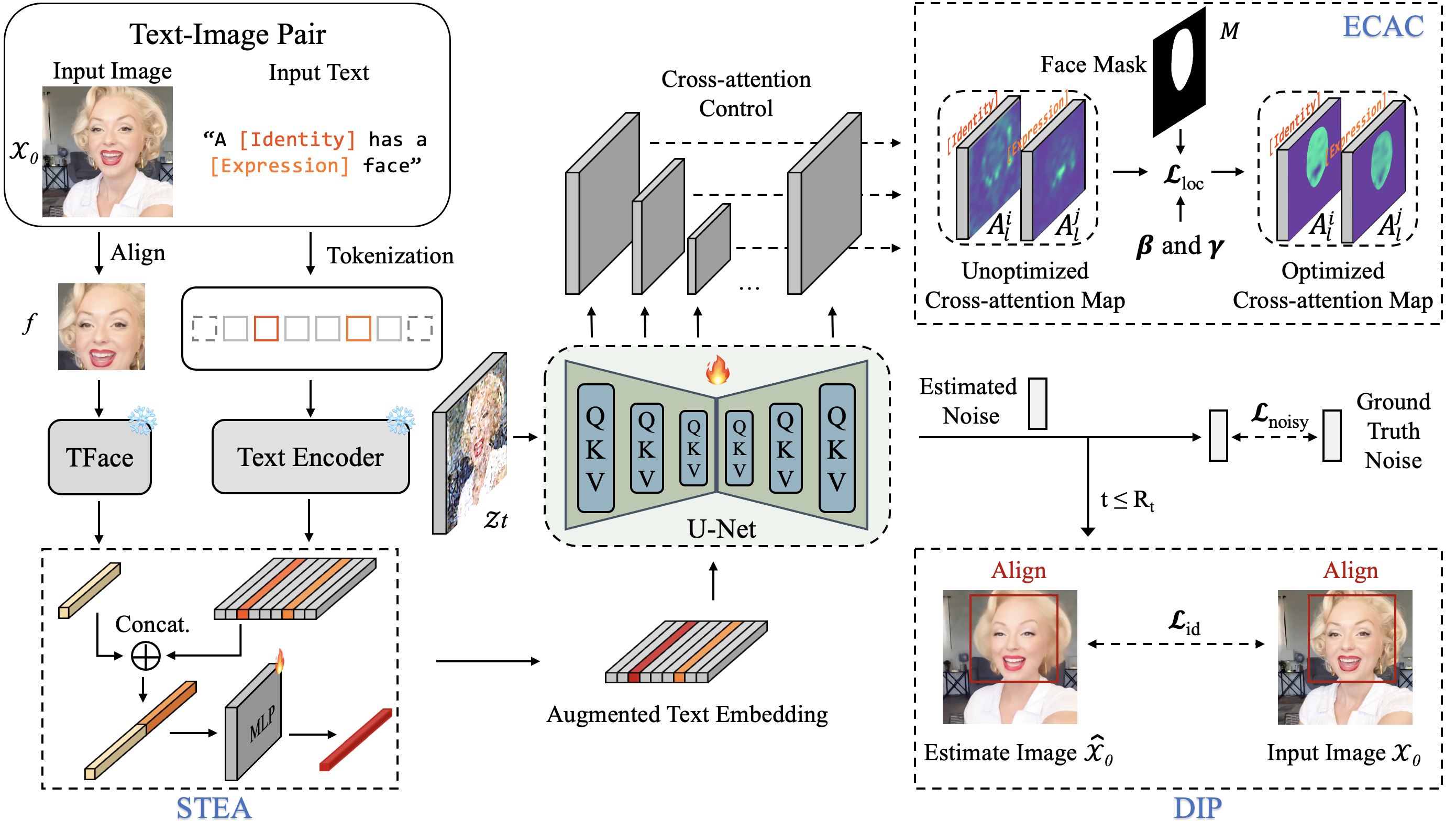

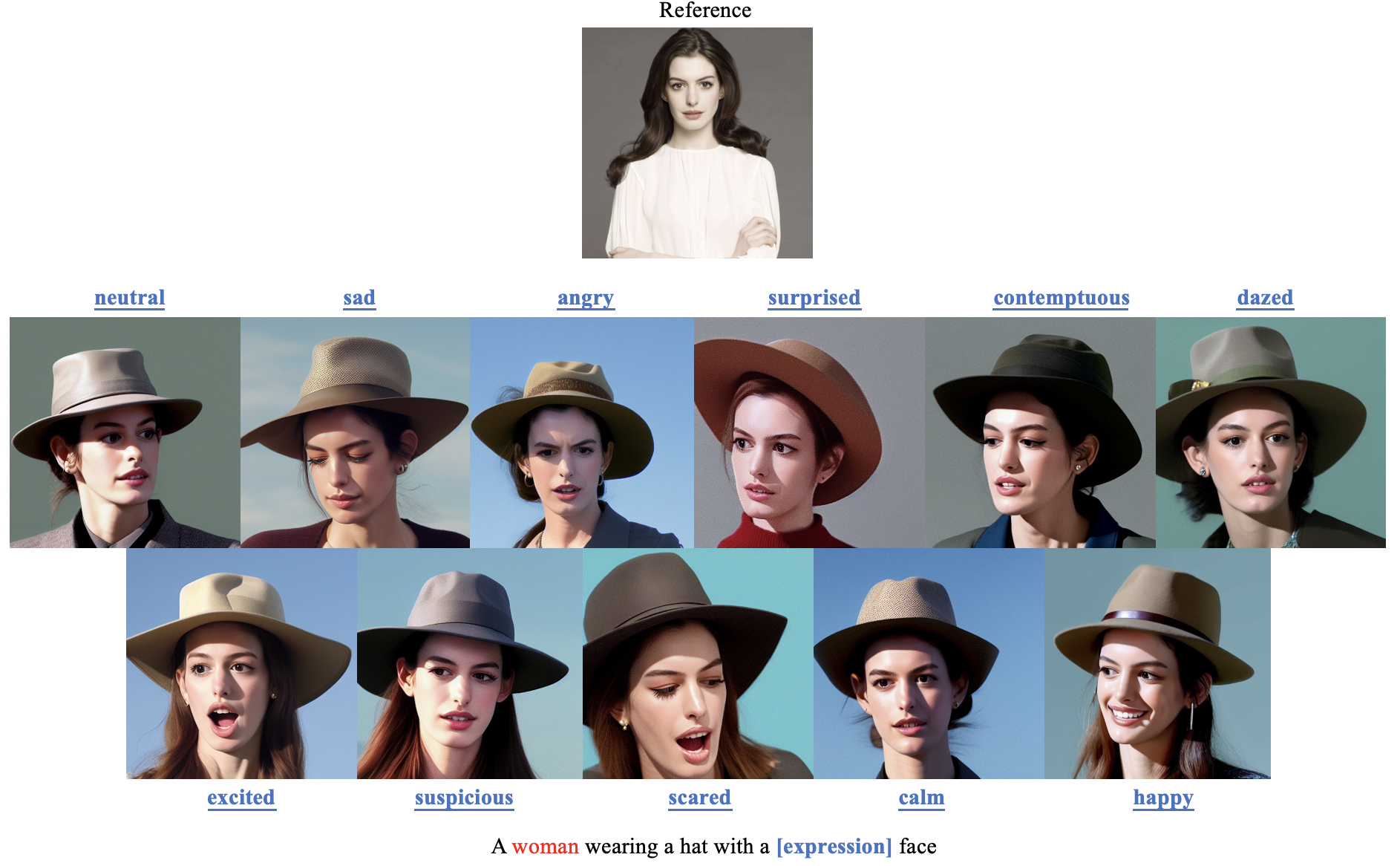

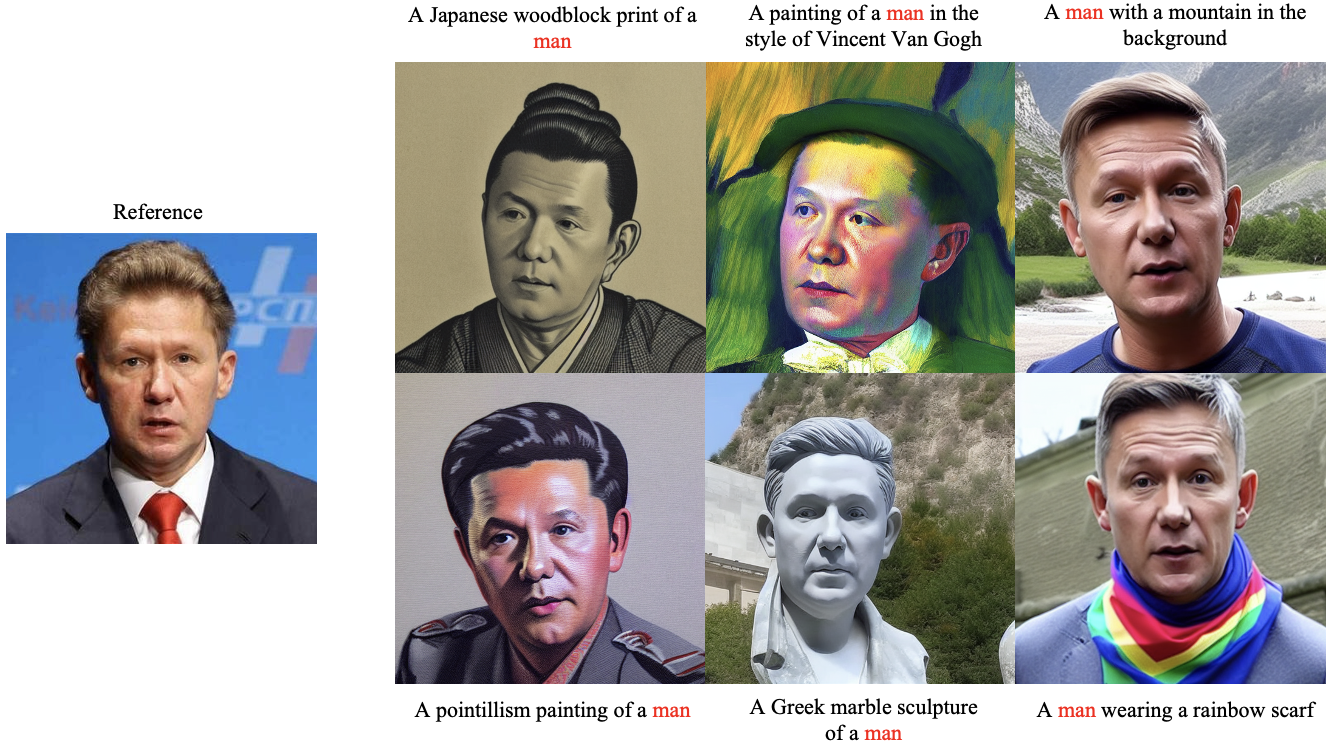

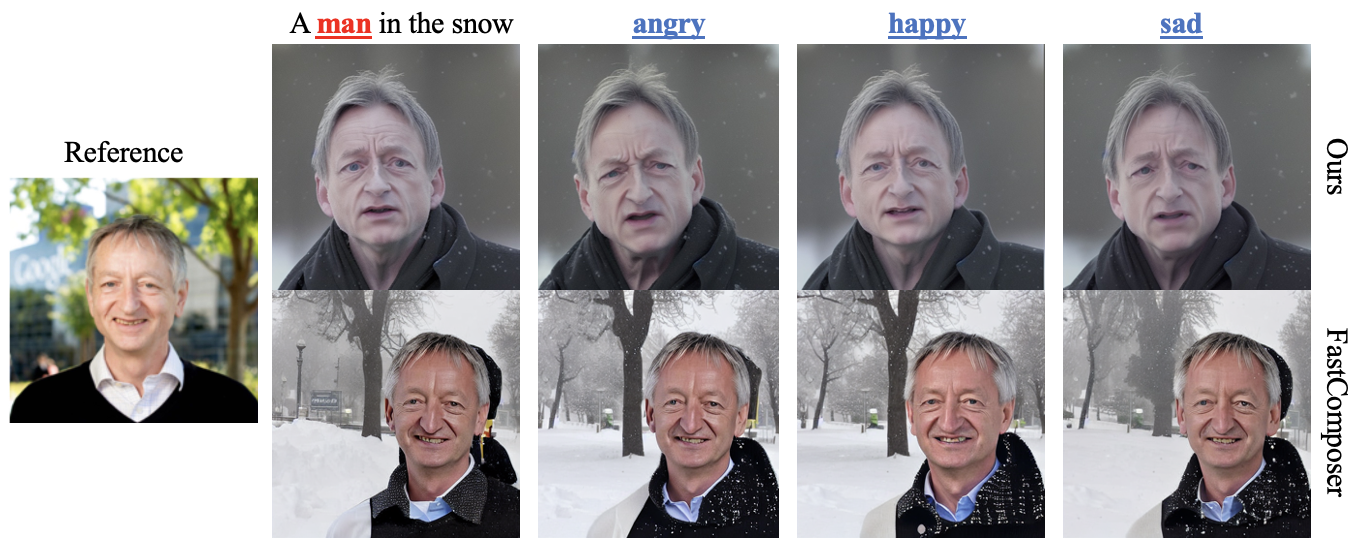

TL;DR: PortraitBooth enables text-to-portrait generation from a single image. It preserves identity, supports diverse expression editing and multi-subject generation, keeps training costs low, and requires no fine-tuning at inference time.

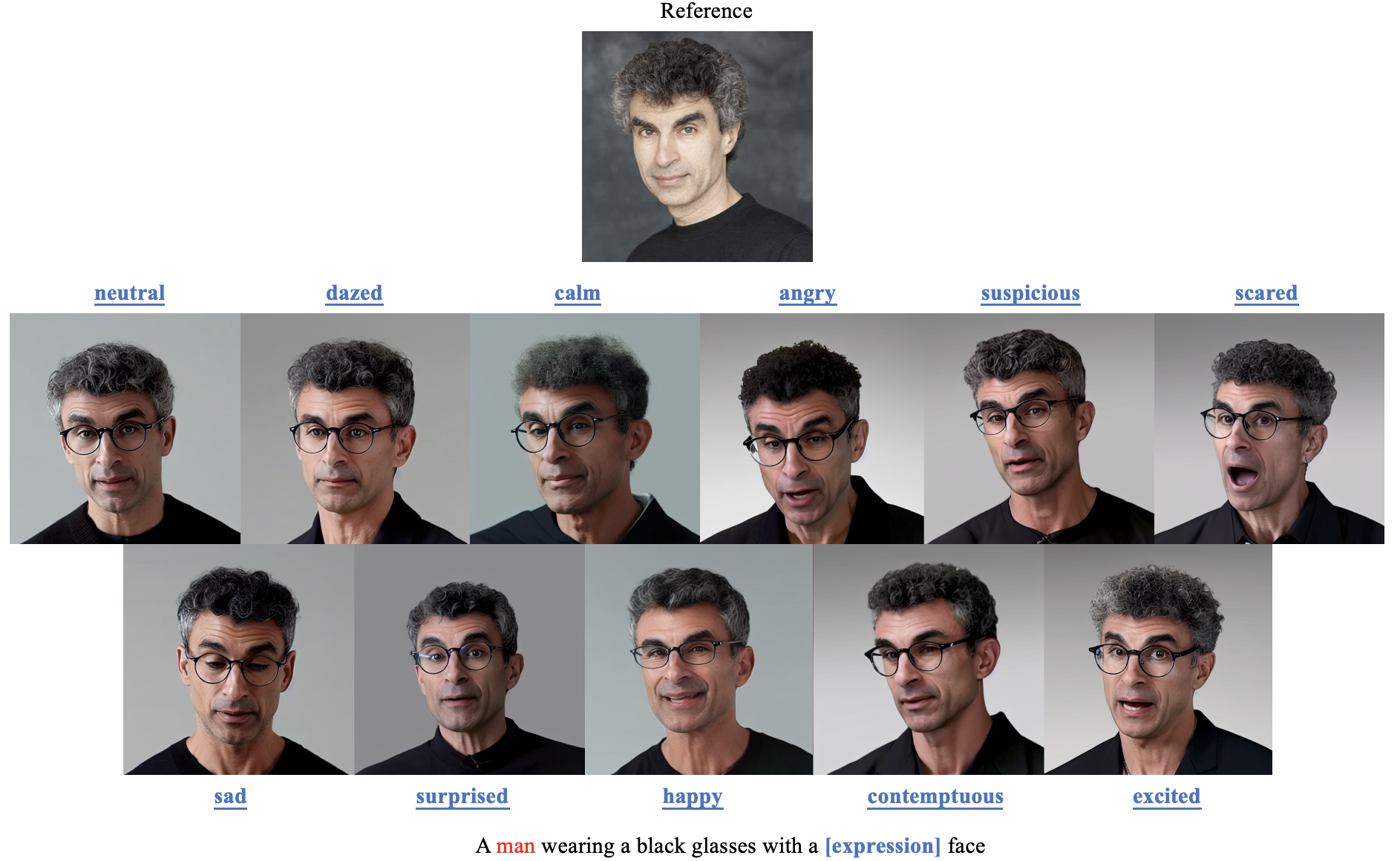

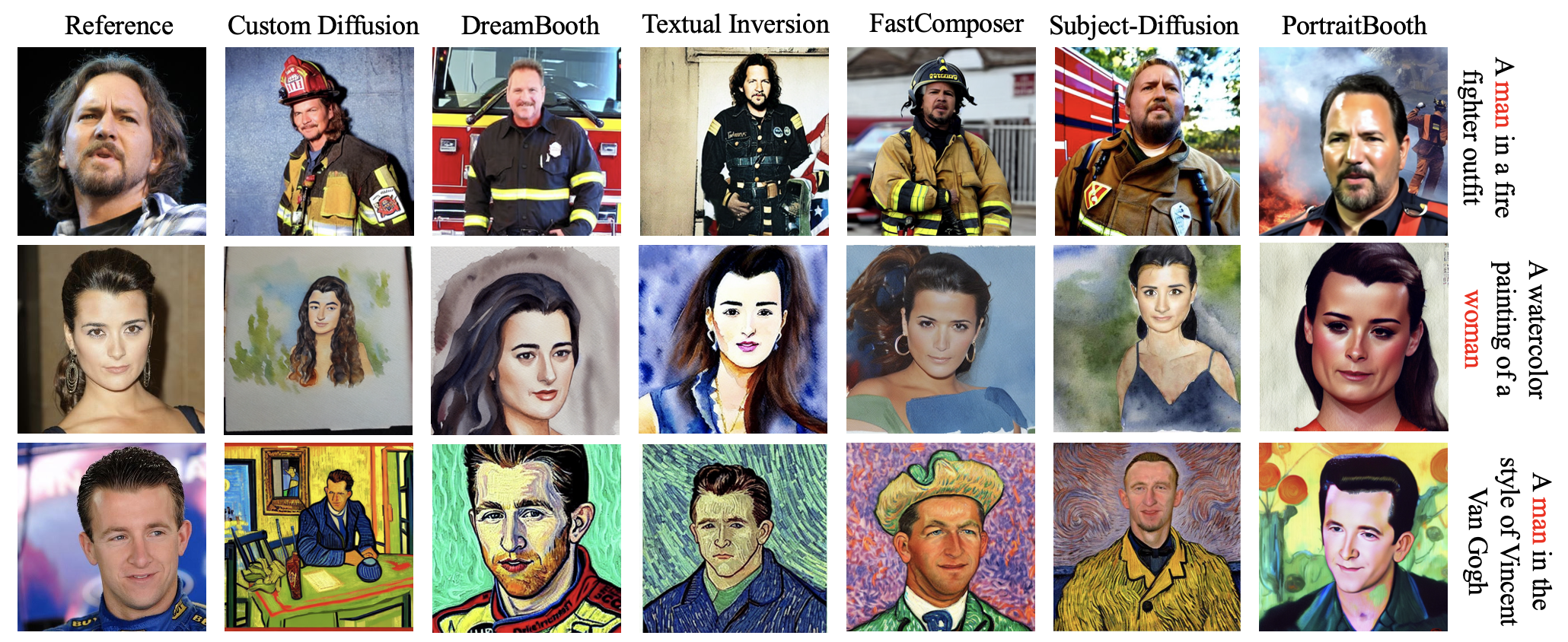

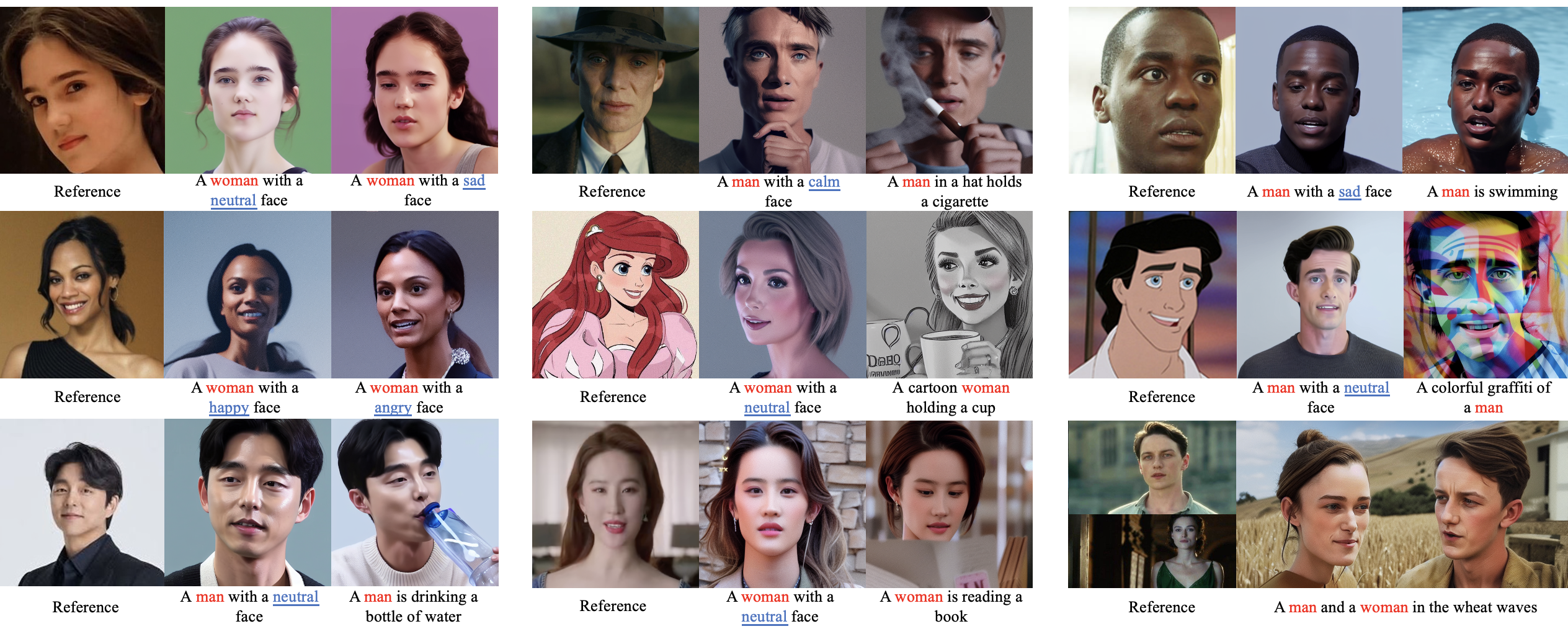

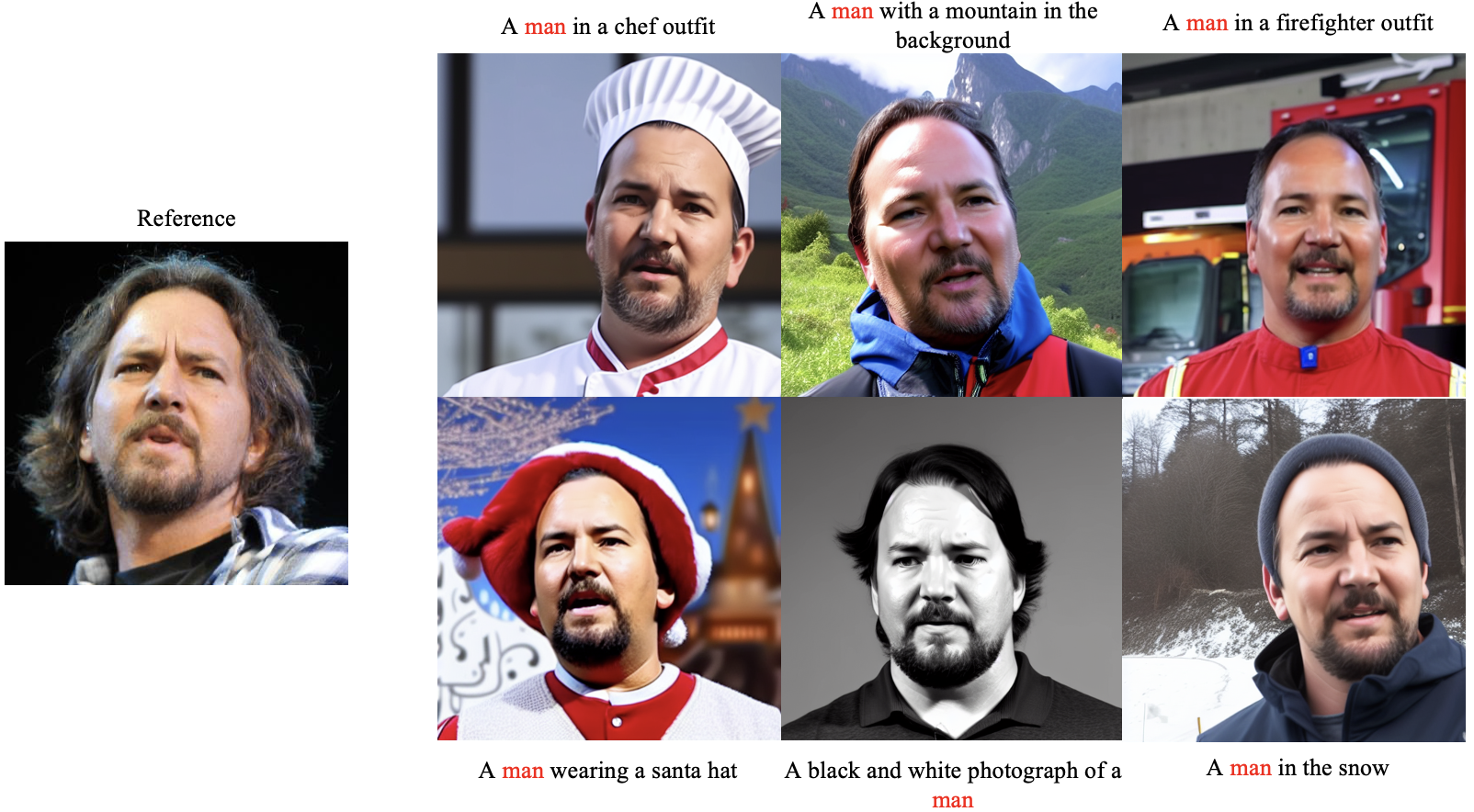

Recent advancements in personalized image generation using diffusion models have been noteworthy. However, existing methods require subject-specific fine-tuning, a computationally intensive process that hinders efficient deployment and limits practical usability. These methods also often suffer from identity distortion and limited expression diversity. To address these challenges, we propose PortraitBooth, an approach designed for high efficiency, robust identity preservation, and expression-editable text-to-image generation. PortraitBooth leverages subject embeddings from a face recognition model for personalized image generation without fine-tuning, which eliminates that computational overhead and mitigates identity distortion. The introduced dynamic identity preservation strategy further ensures close resemblance to the identity in the original image. Moreover, PortraitBooth incorporates emotion-aware cross-attention control for diverse facial expressions in generated images, supporting text-driven expression editing. Its scalability enables efficient and high-quality image creation, including multi-subject generation. Extensive results demonstrate superior performance over other state-of-the-art methods in both single and multiple image generation scenarios.

@article{peng2023portraitbooth,
  title={PortraitBooth: A Versatile Portrait Model for Fast Identity-preserved Personalization},
  author={Peng, Xu and Zhu, Junwei and Jiang, Boyuan and Tai, Ying and Luo, Donghao and Zhang, Jiangning and Lin, Wei and Jin, Taisong and Wang, Chengjie and Ji, Rongrong},
  journal={arXiv preprint arXiv:2312.06354},
  year={2023}
}